BUGLE

Method for Duplicate Defect Report Detection with Transformers

Abstract

This article investigates how open-source transformers can be used to detect duplicate defect reports.

Test Scouts AB, Sweden

Autumn 2024

Introduction

Because many software testers work on the same project, different users may report the same defect in different words in the bug-tracking system. Every defect report must be addressed during the software development life cycle to ensure the quality of the product, so the more reports there are to resolve, the higher the maintenance cost. We therefore think that an automated tool that detects duplicates, or suggests several potential duplicates to the software tester, can relieve this bottleneck and make the software development life cycle more effective.

Background Information

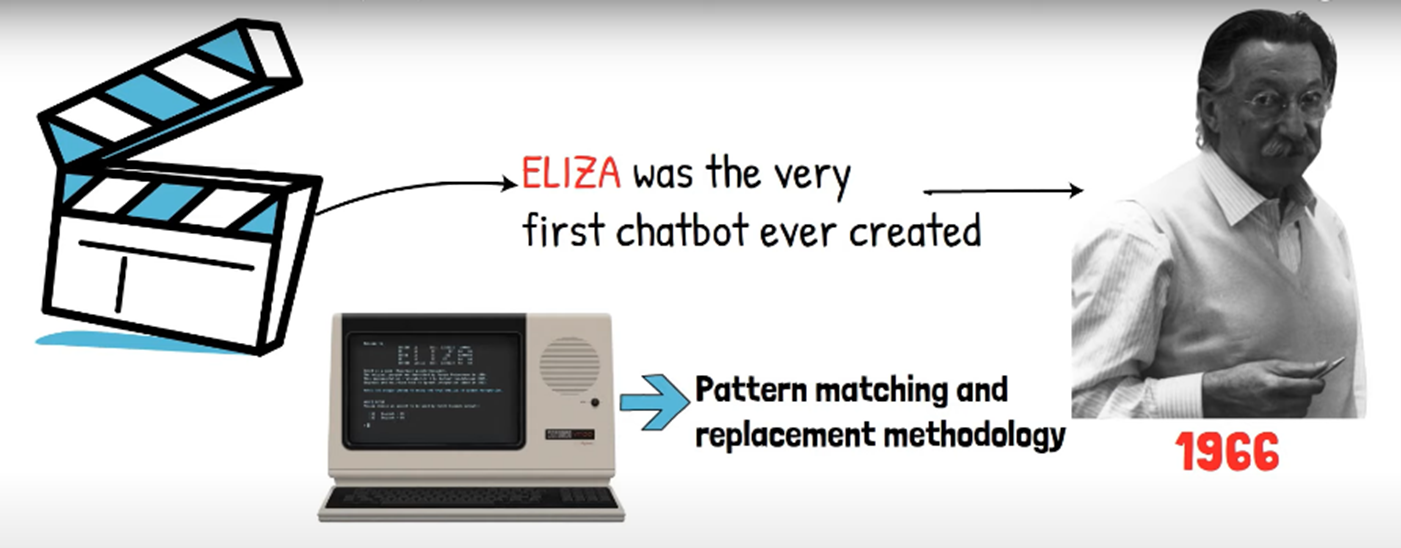

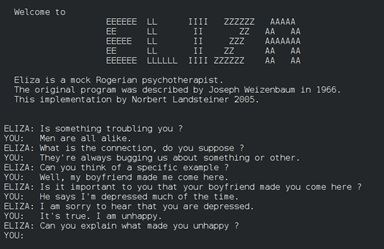

Natural language processing (NLP) is a subfield of artificial intelligence (AI) whose goal is to enable computers and digital devices to recognize, understand and generate text and speech. Typical NLP applications include text summarization, translation, voice recognition, sentiment analysis and natural language generation (Holdsworth, 2024). If you have ever used a voice assistant such as Siri or Alexa, a chatbot such as ChatGPT or Gemini, or text autocorrection and autocompletion, you have already encountered a real-world application of NLP.

Until the early 2010s, NLP research and applications were dominated by statistical models such as Hidden Markov Models (HMMs) and n-grams from statistical language modelling. Naïve Bayes and Support Vector Machines (SVMs) were the methods typically used for text classification and speech recognition.

From the early 2010s, neural networks and word embeddings increasingly became the state of the art. Training such models in the supervised setting amounts to correlating data features with data labels, which involves a very large number of matrix multiplications. To speed up training, these operations need to run in parallel (MSV, 2017). Graphics processing units (GPUs) can perform such matrix multiplications in parallel at massive scale, which supplied the computational power needed to train deep learning models. Together, data availability, computational power and deep learning architectures such as Recurrent Neural Networks (RNNs) and Long Short-Term Memory networks (LSTMs) took NLP research to the next level.

A Transformer is a type of neural network architecture that was first introduced in 2017 by Google Brain researchers in the paper “Attention Is All You Need” (Ashish Vaswani, 2017). A Large Language Model (LLM) is a type of AI model specifically designed to understand, generate and interact with human language; such models are often built upon the Transformer architecture. The Transformer architecture brings the following advantages:

- Ability to Process Data in Parallel: Unlike RNNs and LSTMs, which process data sequentially (one word at a time), transformers can process entire sequences of data in parallel. This makes them efficient to train and optimize through back-propagation.

- Handling of Long-Range Dependencies: Transformers address one of the main limitations of RNNs and LSTMs, the difficulty of learning long-range dependencies in a sequence. Their positional encoding and multi-head attention layers make them expressive enough to capture such dependencies.
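The two advantages above can be illustrated with a minimal sketch of scaled dot-product self-attention, the core operation of the Transformer. It is a simplified illustration, not BUGLE's code: it assumes that queries, keys and values are the input vectors themselves, whereas a real Transformer applies learned projections, several attention heads and positional encoding. The function names and the toy vectors are made up for this example.

```python
import math

def softmax(scores):
    """Turn a list of raw scores into weights that sum to 1."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Scaled dot-product self-attention with identity projections.

    Simplifying assumption: queries, keys and values are all the input
    vectors; learned projections and multiple heads are left out.
    """
    dim = len(vectors[0])
    scale = math.sqrt(dim)
    outputs = []
    # Each position is computed independently of the others, which is
    # what allows the work to be done in parallel on suitable hardware.
    for query in vectors:
        scores = [sum(q * k for q, k in zip(query, key)) / scale for key in vectors]
        weights = softmax(scores)
        # Every output mixes ALL positions, near or far, in a single step.
        outputs.append([
            sum(w * value[d] for w, value in zip(weights, vectors))
            for d in range(dim)
        ])
    return outputs

tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # toy 2-dimensional token vectors
contextual = self_attention(tokens)
```

Because every output vector is a weighted average over the whole sequence, a token at the start can influence a token at the end without passing through the intermediate steps that an RNN or LSTM would need.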

BUGLE

BUGLE is AI-powered software designed to help software testers detect duplicate defect reports in a database, with the overall goal of reducing the maintenance cost of an ongoing software development life cycle.

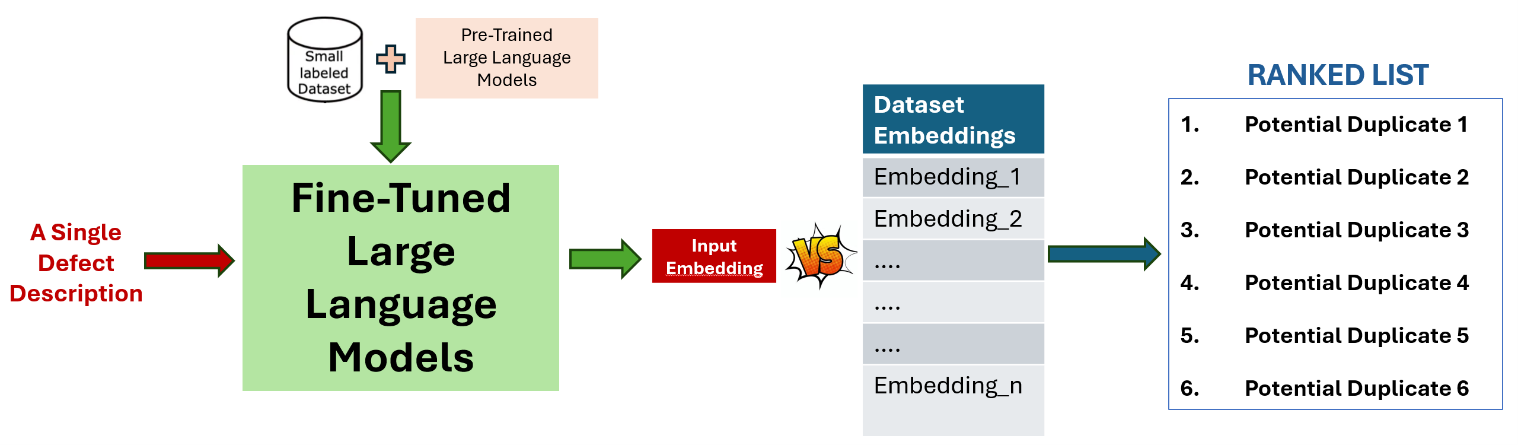

BUGLE uses state-of-the-art open-source large language models to measure the semantic similarity between defect reports written in natural language by software testers. Semantic understanding is made possible by pre-training encoder-only transformers on a vast amount of text from the Internet. For example, BERT is trained on the BooksCorpus (800 million words) and Wikipedia (2,500 million words) (Jacob Devlin, 2019). The Transformer architecture described above produces contextual token representations, from which a text embedding, an array of floating-point numbers, is generated for each defect report. BUGLE then infers potential duplicate defect reports from a similarity metric, such as cosine similarity, between these multi-dimensional arrays. At present, BUGLE also relies on fine-tuning: encoder-only transformers are further trained on a defect report dataset, with the hypothesis that fine-tuning introduces domain-specific knowledge and thus enhances the model’s ability to detect duplicates.
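As a rough illustration of the similarity step, the sketch below computes cosine similarity between embeddings in plain Python. The three-dimensional vectors are made up for readability; real embeddings from an encoder-only transformer have hundreds of dimensions, and nothing here is taken from BUGLE's implementation.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # convention chosen here for zero vectors
    return dot / (norm_a * norm_b)

# Made-up embeddings standing in for three defect reports.
login_crash = [0.9, 0.1, 0.2]
signin_crash = [0.8, 0.2, 0.1]   # same defect, different wording
export_typo = [0.1, 0.9, 0.7]    # unrelated defect

same_defect = cosine_similarity(login_crash, signin_crash)
different_defect = cosine_similarity(login_crash, export_typo)
```

With these toy vectors, `same_defect` is close to 1 while `different_defect` is much lower, which is the signal a duplicate detector ranks on.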

BUGLE Features

Detecting Duplicates in a Dataset

The first goal is to detect duplicate defect reports in an existing dataset, as they are a major cost in the software development life cycle. BUGLE assesses the semantic similarity of each pair of defect reports in the given dataset and suggests k pairs, sorted from the most likely to the least likely duplicate, where k is a parameter chosen by the user.

Figure 3. Detecting Duplicates in a Dataset Illustration
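A minimal sketch of this feature, assuming each defect report has already been turned into an embedding: score every pair with cosine similarity and keep the k best. The function name `top_k_duplicate_pairs`, the report IDs and the vectors are hypothetical, and a production system would likely use vectorized or approximate search rather than this brute-force loop.

```python
import heapq
import math
from itertools import combinations

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)

def top_k_duplicate_pairs(embeddings, k):
    """Return the k most similar report pairs as (score, id_a, id_b).

    `embeddings` maps a report ID to its embedding vector. The result is
    sorted from the most likely to the least likely duplicate.
    """
    scored = (
        (cosine_similarity(embeddings[a], embeddings[b]), a, b)
        for a, b in combinations(sorted(embeddings), 2)
    )
    return heapq.nlargest(k, scored)

# Made-up embeddings for three reports.
example_embeddings = {
    "BUG-1": [0.9, 0.1, 0.2],
    "BUG-2": [0.8, 0.2, 0.1],
    "BUG-3": [0.1, 0.9, 0.7],
}
suggested_pairs = top_k_duplicate_pairs(example_embeddings, k=2)
```

`heapq.nlargest` keeps only k candidates in memory at a time, which matters because the number of pairs grows quadratically with the size of the dataset.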

Controlling New Defect Report

Eliminating duplicate defect reports from a dataset takes effort: computational power, time invested in fine-tuning AI models, and feedback from domain experts. To get the most out of this investment, it is important to avoid registering new duplicate reports in the dataset. We therefore consider checking whether a new defect report already exists in the dataset an essential part of BUGLE’s methodology.

BUGLE computes the semantic similarity between the new defect report and every defect report in the dataset and proposes the top k potential duplicate matches to the user for final assessment. As before, k is a parameter chosen by the user.

Figure 4. Controlling New Defect Report Illustration
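The check for a new report can be sketched as a one-against-all ranking. As before, this assumes embeddings are already available; `suggest_duplicates`, the IDs and the vectors are illustrative names and values, not BUGLE's API.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)

def suggest_duplicates(new_embedding, stored_embeddings, k):
    """Rank stored reports by similarity to the new report; keep the top k.

    Returns a list of (score, report_id), most similar first.
    """
    scored = [
        (cosine_similarity(new_embedding, vector), report_id)
        for report_id, vector in stored_embeddings.items()
    ]
    scored.sort(reverse=True)
    return scored[:k]

# Made-up embeddings for the stored reports and the incoming one.
stored = {
    "BUG-1": [0.9, 0.1, 0.2],
    "BUG-2": [0.8, 0.2, 0.1],
    "BUG-3": [0.1, 0.9, 0.7],
}
new_report = [0.9, 0.05, 0.25]
suggestions = suggest_duplicates(new_report, stored, k=2)
```

The final decision stays with the user: the function only orders candidates, it does not apply a threshold or reject the new report.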

Results

We use a dataset belonging to one of Test Scouts’ industrial partners to assess BUGLE’s performance. The dataset contains 1573 non-empty defect reports, 193 of which are labeled as Duplicate. Each sample has a unique ID, a defect description and a status, all written and resolved by professionals.

First Feature: Detecting Duplicates in a Dataset

From a wider viewpoint, BUGLE is a recommendation system, and it is important to examine how well BUGLE can suggest relevant items. There are online metrics and offline metrics to evaluate AI models in a recommendation system. If metrics are collected through experiments with real users, they are called online metrics. Otherwise, they are offline metrics.

Since the industrial dataset is labeled, meaning that known duplicates have the status “Duplicate”, it contains the ground truth. In addition, we have not yet launched BUGLE for real users. We have therefore decided to evaluate the “Detecting Duplicates in a Dataset” feature by recall@K, which is an offline metric.

We evaluate our models by recall@K, where K is the number of suggested items, because we would like to answer the following question: what share of the known duplicate defect reports is retrieved within the top K suggestions made by BUGLE?

There is no gold standard for selecting the value of K; it depends on the product and on the user’s preferences. We therefore use two values of K: the number of known duplicates in the dataset (193), and 300, which is large enough to cover the top picks and small enough for one person to review in a day.

A duplicate involves at least two defect reports, so reports are compared in pairs. For a dataset of n items, the number of candidate pairs is the number of combinations of n things taken 2 at a time, n(n − 1)/2. For example, if n is 1500, there are 1,124,250 pairs.
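Recall@K can be sketched in a few lines. The article does not spell out how suggestions are matched against the labeled duplicates, so the sketch assumes that both are expressed as comparable items (here, pairs of report IDs); the function name and the data are made up.

```python
def recall_at_k(ranked_suggestions, known_duplicates, k):
    """Share of the known duplicates that appear in the top k suggestions."""
    if not known_duplicates:
        raise ValueError("recall is undefined without known duplicates")
    top_k = set(ranked_suggestions[:k])
    return len(top_k & set(known_duplicates)) / len(known_duplicates)

# Made-up ranking (best first) and ground truth.
ranked = [("BUG-1", "BUG-2"), ("BUG-4", "BUG-9"), ("BUG-3", "BUG-7"),
          ("BUG-5", "BUG-6"), ("BUG-2", "BUG-8")]
known = {("BUG-1", "BUG-2"), ("BUG-3", "BUG-7"),
         ("BUG-2", "BUG-8"), ("BUG-10", "BUG-11")}

recall_at_3 = recall_at_k(ranked, known, k=3)
recall_at_5 = recall_at_k(ranked, known, k=5)
```

Recall can only grow or stay flat as K increases, which is why K has to be fixed in advance for results to be comparable across models.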
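The pair count can be checked with Python's standard library. The helper name `candidate_pairs` is only for illustration.

```python
import math

def candidate_pairs(n):
    """Number of unordered pairs among n defect reports: n * (n - 1) / 2."""
    return math.comb(n, 2)

pairs_for_1500 = candidate_pairs(1500)   # the example from the text
pairs_for_1573 = candidate_pairs(1573)   # the size of the industrial dataset
```

Even for a dataset of this modest size the search space exceeds a million pairs, so a review budget of a few hundred suggestions covers only a tiny fraction of it.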

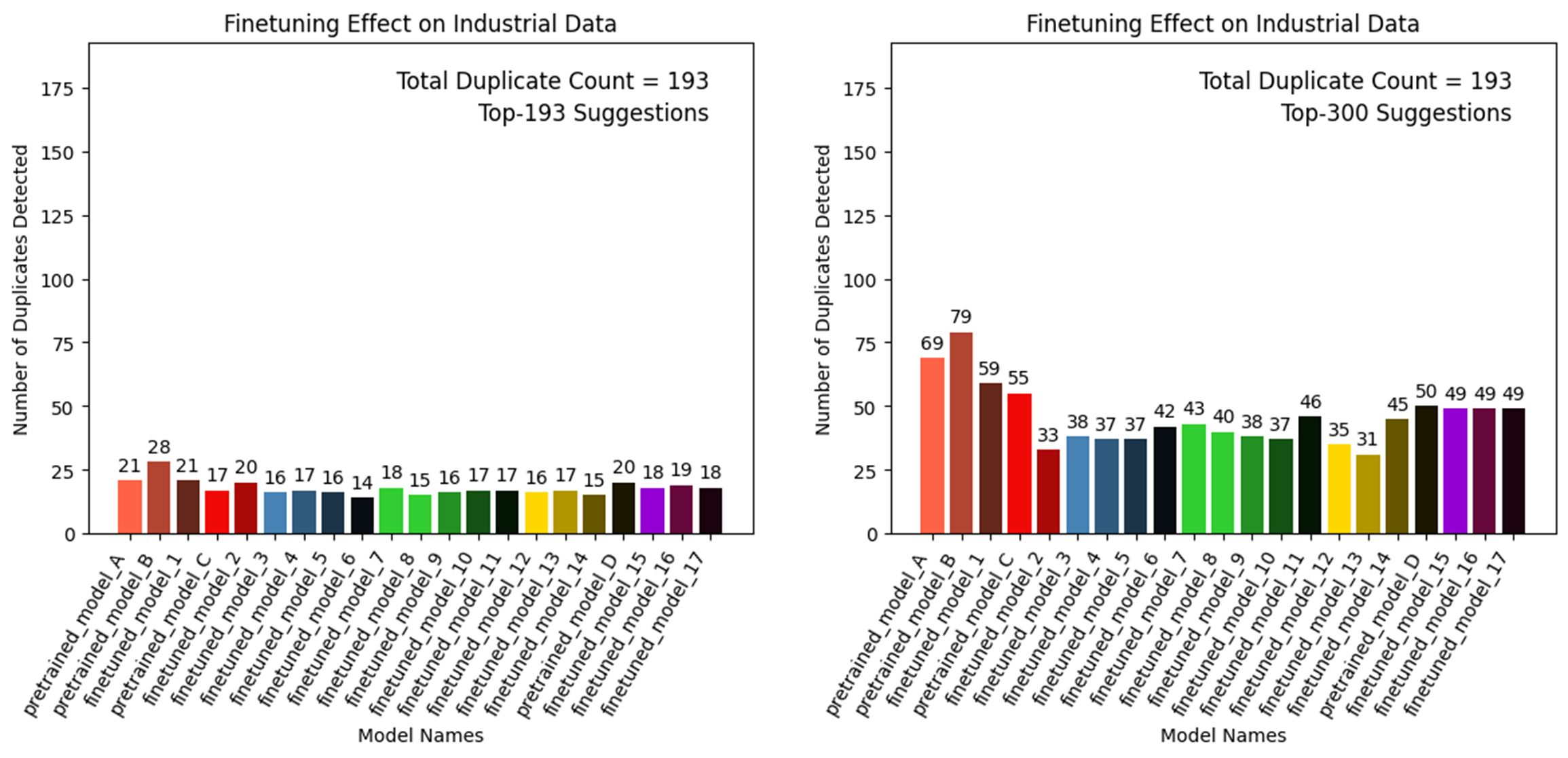

Having said that, our experiments show that BUGLE achieved 14.5% at recall@193 and 41% at recall@300 on the dataset acquired from an industrial partner of Test Scouts (Figure 5). BUGLE includes several open-source transformer models as well as their fine-tuned versions, and the best-performing model is selected for duplicate detection.

Figure 5. Find All Duplicate Feature Performance

Second Feature: Controlling New Defect Report

Broadly speaking, semantic analysis relies on how well embeddings represent natural language. Encoder-only transformers generate embeddings from tokens, which makes tokenization important. Tokens can be thought of as words or sub-words.

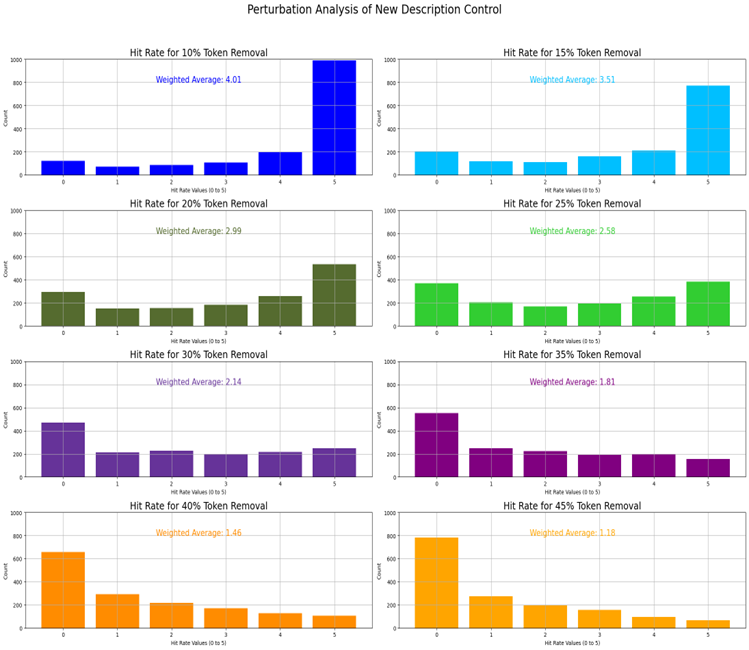

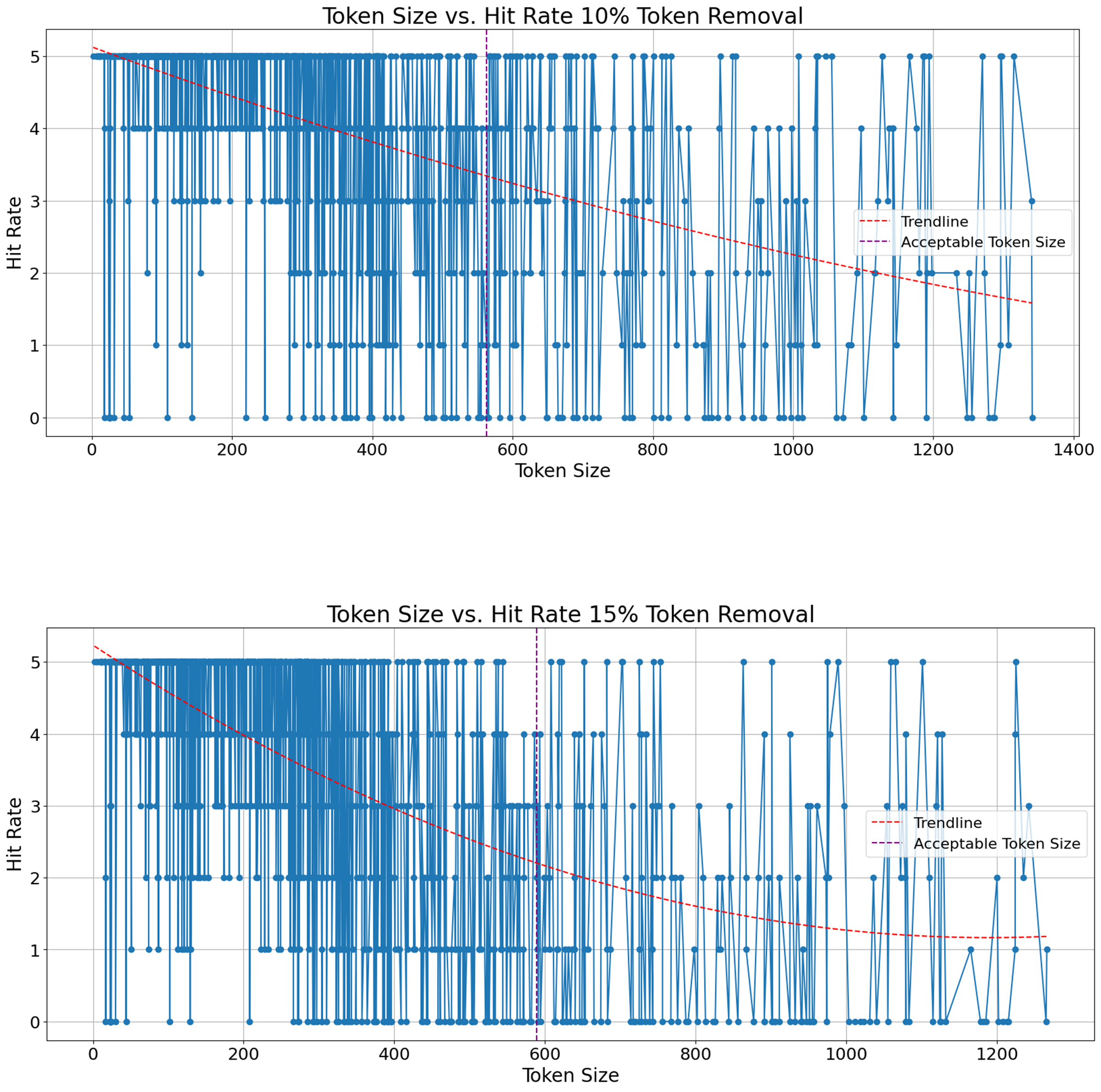

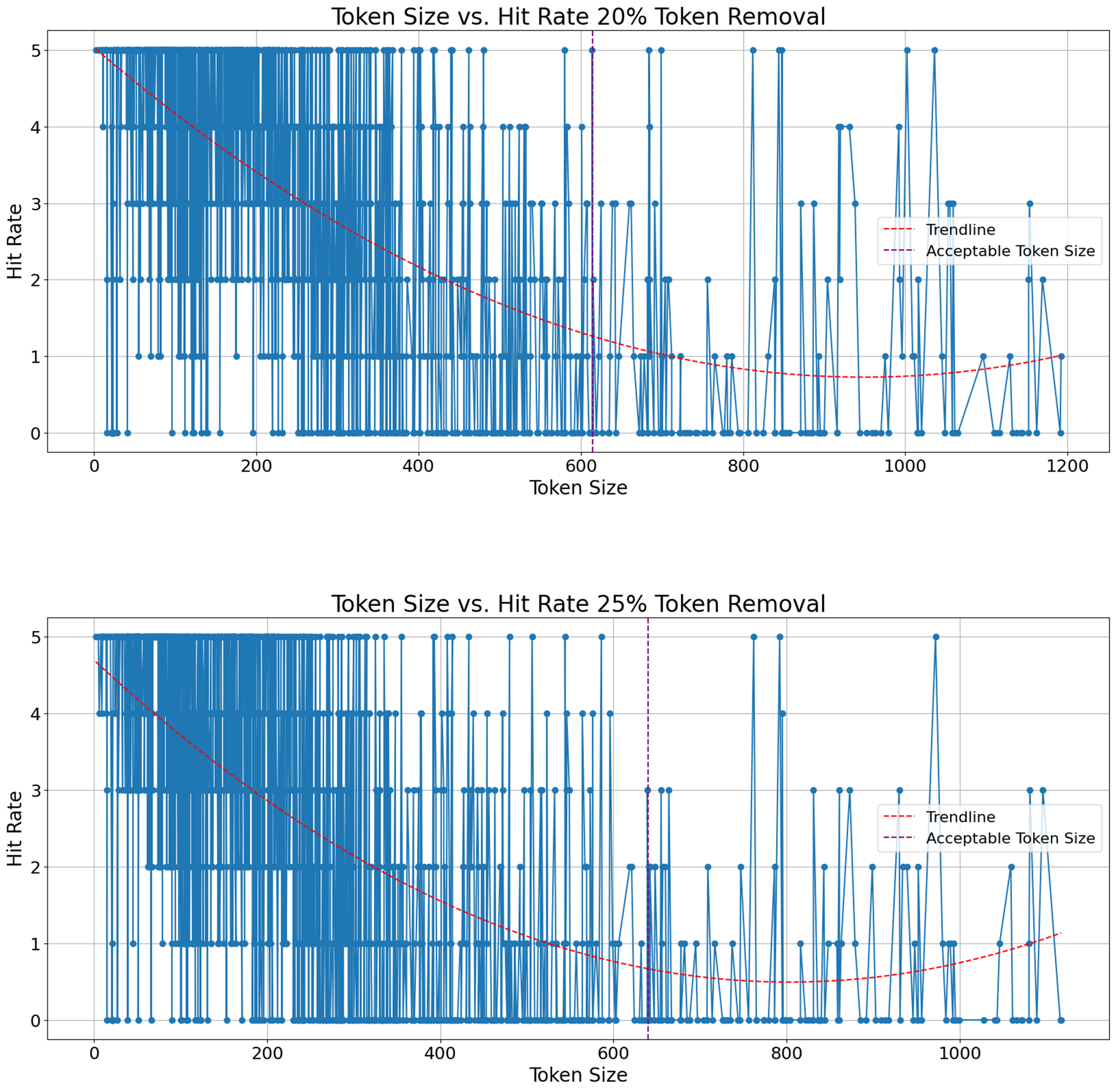

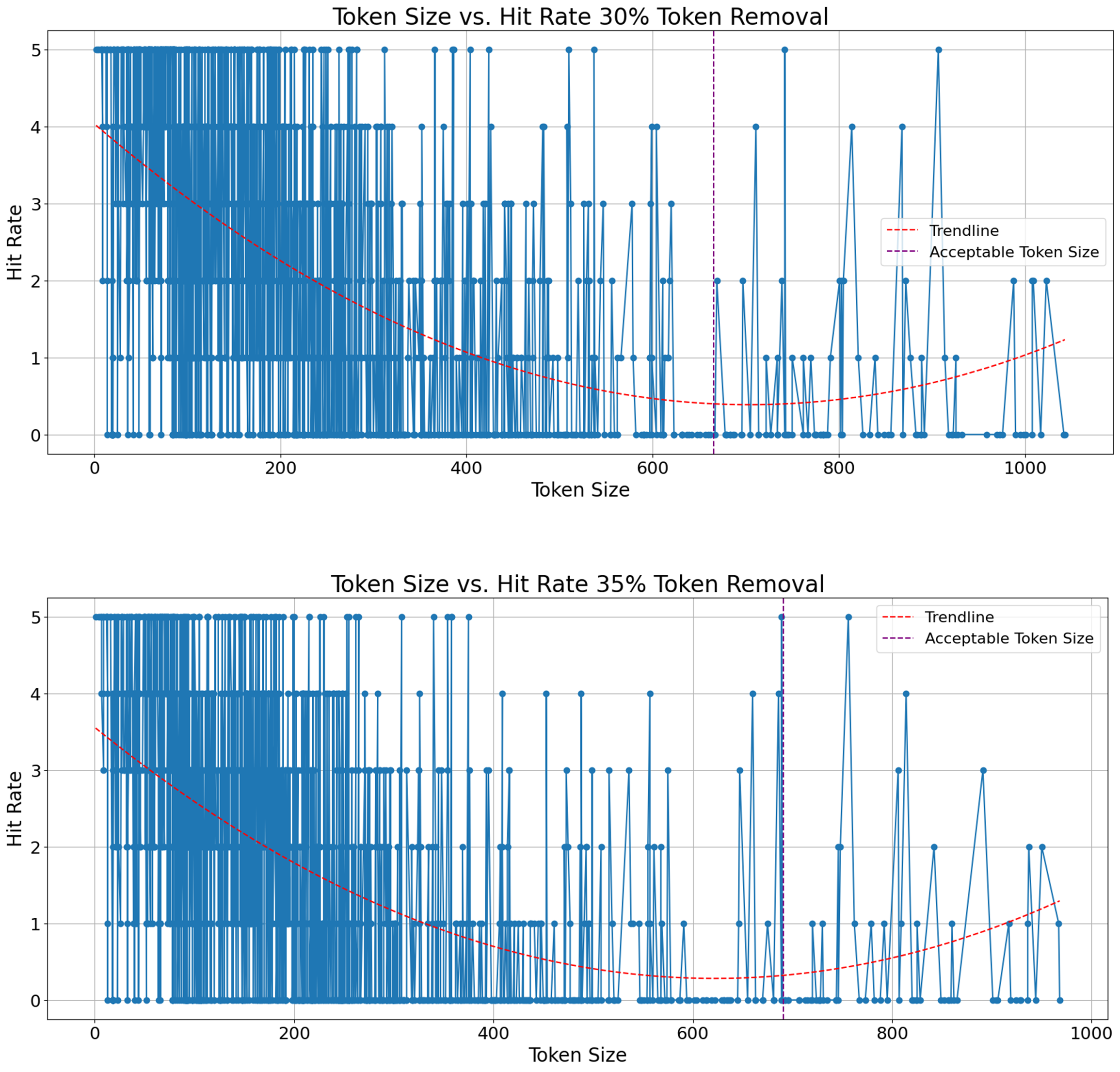

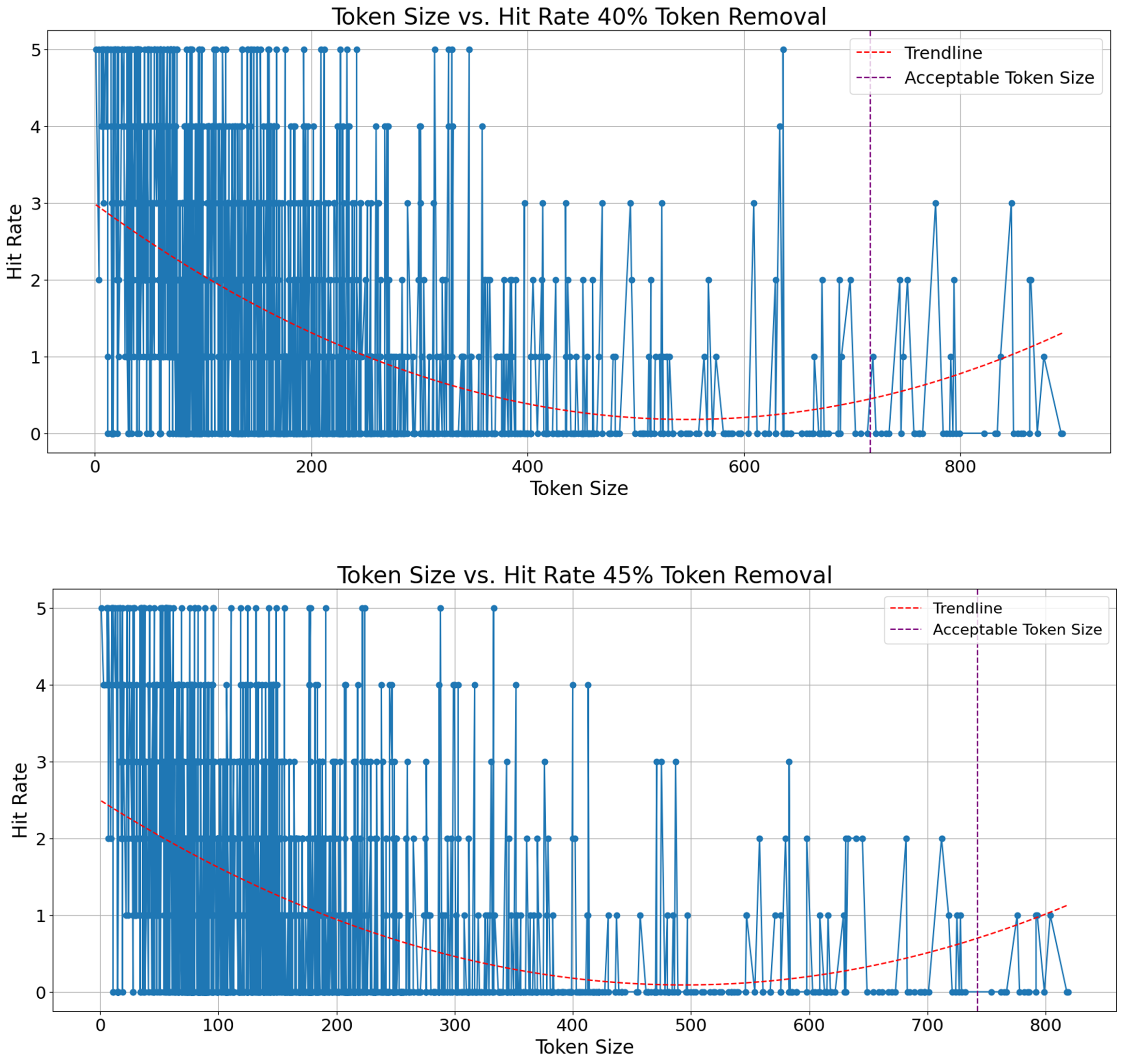

Our testing strategy for controlling new defect reports is perturbation analysis, which investigates how robust BUGLE is to distortion applied to defect reports. We introduce distortion by tokenizing the input defect report, randomly removing a fixed percentage of its tokens, from 10% up to 45%, and reconstructing the defect report from the remaining tokens. We apply this technique 5 times to each defect report to reduce the effect of randomness in token removal on the results. The distorted defect reports are then fed back into BUGLE, and we observe whether the original version appears as the first suggestion. If so, it is a hit; otherwise, it is a failure. The results of the experiment are shown in Figure 6. We compute the weighted average of the hit rate values and, as expected, the more tokens are removed, the smaller the weighted average becomes. Our goal, however, is not to find this obvious trend but to answer the question “What noise level is within our acceptable tolerance range?”. That tolerance depends on the users, as each industry has its own standards. It is nevertheless worth mentioning that removing 25% of the tokens results in a weighted average score of 2.58 out of 5, while almost 400 of the 1573 defect reports are always retrieved correctly.
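The perturbation procedure can be sketched as follows. Several parts are assumptions made for illustration: tokens are approximated by whitespace-separated words rather than the model's sub-word tokens, and `toy_retrieve_top1` is a word-overlap stand-in for BUGLE's embedding-based retrieval. The report texts are invented.

```python
import random

def remove_tokens(text, removal_rate, rng):
    """Drop a random share of whitespace tokens, keeping the rest in order."""
    tokens = text.split()
    n_remove = round(len(tokens) * removal_rate)
    removed = set(rng.sample(range(len(tokens)), n_remove))
    return " ".join(tok for i, tok in enumerate(tokens) if i not in removed)

def hit_count(report_id, text, retrieve_top1, removal_rate, trials=5, seed=0):
    """Count in how many trials the distorted report retrieves its original."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        distorted = remove_tokens(text, removal_rate, rng)
        if retrieve_top1(distorted) == report_id:
            hits += 1
    return hits

# Invented reports and a toy retriever based on shared words.
reports = {
    "BUG-1": "login button crashes the app after entering a wrong password twice",
    "BUG-2": "exported csv file shows dates in an incorrect format for some users",
}

def toy_retrieve_top1(query):
    """Hypothetical stand-in for retrieval: pick the report sharing most words."""
    words = set(query.split())
    return max(reports, key=lambda rid: len(words & set(reports[rid].split())))

hits_at_25_percent = hit_count("BUG-1", reports["BUG-1"], toy_retrieve_top1, 0.25)
```

Running `hit_count` for every report and every removal rate yields the per-report scores out of 5 from which the averages in this section are computed.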

We conducted another experiment to investigate how the number of tokens in a defect report affects retrieval performance. If there is an optimal length for defect reports that maximizes duplicate detection, it could reshape industry standards for defect reporting style. In other words, one way to improve duplicate detection may be for professionals to restructure the way they write defect reports. An analogy is prompt engineering, where users tailor their prompts to get the best possible answers as early as possible in a chat with a language model. The results are shown in Figure 8, Figure 9, Figure 10 and Figure 11 (see Appendix), where the y-axis denotes the hit rate out of 5 and the x-axis shows the number of tokens in a defect report. We fit a second-degree polynomial, drawn as the red line, to represent the trend of the hit rate with respect to the number of tokens per defect report. The vertical purple line marks the maximum number of tokens that the embedder model can accept. For example, our embedder model has a fixed maximum input size of 512 tokens, so at a removal rate of 10%, any defect report with fewer than about 512 × 1.1 tokens is processed in full. One takeaway from these results is that, regardless of how much noise is introduced, the longer a defect report gets, the fewer correct retrievals are achieved. The results worsen in particular when the number of tokens exceeds the maximum input size of our model. It is therefore possible to infer that defect reports need not be extra detailed to improve duplicate detection by encoder-only transformers. For an existing dataset, the number of tokens in each defect report could be reduced with language models built for summarization. Another solution could be to use proprietary models that offer a larger maximum input size.
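The trend line can be reproduced with an ordinary least-squares fit of a second-degree polynomial. The sketch below solves the normal equations with Cramer's rule in plain Python so that it has no dependencies; a numerical library would normally be used instead. The data points are made up, and the token counts are expressed in hundreds to keep the sums well scaled.

```python
def det3(m):
    """Determinant of a 3x3 matrix given as a list of rows."""
    return (
        m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
        - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
        + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0])
    )

def fit_quadratic(xs, ys):
    """Least-squares fit of y = a*x^2 + b*x + c; returns (a, b, c)."""
    s = [sum(x ** p for x in xs) for p in range(5)]               # sums of x^p
    t = [sum(y * x ** p for x, y in zip(xs, ys)) for p in range(3)]  # sums of y*x^p
    # Normal equations for the unknowns (a, b, c).
    matrix = [[s[4], s[3], s[2]],
              [s[3], s[2], s[1]],
              [s[2], s[1], s[0]]]
    rhs = [t[2], t[1], t[0]]
    det = det3(matrix)
    if abs(det) < 1e-12:
        raise ValueError("need at least three distinct x values")
    coeffs = []
    for col in range(3):
        # Cramer's rule: replace one column with the right-hand side.
        replaced = [row[:] for row in matrix]
        for r in range(3):
            replaced[r][col] = rhs[r]
        coeffs.append(det3(replaced) / det)
    return tuple(coeffs)

# Made-up observations: token count (in hundreds) vs hit rate out of 5.
token_counts_hundreds = [1, 2, 3, 4, 5, 6]
hit_rates = [4.6, 4.4, 4.0, 3.3, 2.5, 1.4]
a, b, c = fit_quadratic(token_counts_hundreds, hit_rates)
```

A negative leading coefficient `a` on such data corresponds to a curve that bends downward as reports get longer, the shape described for the red line.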

Figure 6. Perturbation Analysis

Further Work

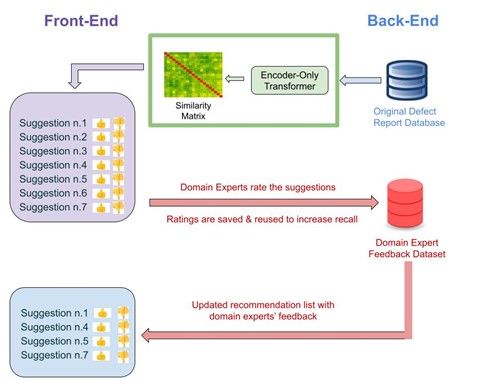

Software testers, quality assurance engineers and product managers have in-depth knowledge of the software or system under test. Our hypothesis is that if BUGLE acquires the knowledge of these domain experts, we will be able to eliminate false positives and improve its performance. Reinforcement Learning from Human Feedback (RLHF) is a technique that adjusts a model’s behavior based on feedback from its users, and this is how we will introduce domain experts’ knowledge into BUGLE.

Suggesting duplicates while the user is typing a defect report could offer a better user experience thanks to its quicker response time. Implementing the front end according to industry standards will make human feedback in the loop possible, and users will get real-time duplicate suggestions.

AI/ML projects are highly data driven, and the power of data needs no further emphasis in 2024. Our vision is to build a data pipeline that improves data quality, optimizes data preprocessing and integrates well with software used in the industry. Seamless integration will minimize the initial effort and time required from our future users.

Big technology companies such as Meta, OpenAI, Mistral, Google and Amazon are in fierce competition to build the most powerful language models as of Autumn 2024, and unsurprisingly their products are the best ones on the market. They offer embedder models via API as a service while preserving data integrity. We know that certain corporations have already started using such services, for example an in-house ChatGPT. It is highly likely that the proprietary state-of-the-art embedder models of these companies will perform well on semantic search, and we therefore see potential in implementing an interchangeable AI component to satisfy our customers’ different needs.
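One way to picture an interchangeable AI component is a small interface that any embedder, open-source or proprietary, can satisfy. This is a design sketch only: the `Embedder` protocol, `CharCountEmbedder` and `embed_reports` are hypothetical names, and the toy embedder produces meaningless vectors purely to show how implementations could be swapped.

```python
from typing import Protocol, Sequence

class Embedder(Protocol):
    """Anything that turns texts into vectors can act as the AI component."""
    def embed(self, texts: Sequence[str]) -> list[list[float]]: ...

class CharCountEmbedder:
    """Toy stand-in; a real one would wrap a local model or a vendor API."""
    def embed(self, texts):
        return [[float(len(t)), float(t.count(" ") + 1)] for t in texts]

def embed_reports(embedder: Embedder, reports):
    """Embed every report with whichever embedder is plugged in."""
    vectors = embedder.embed(list(reports.values()))
    return dict(zip(reports, vectors))

embedded = embed_reports(CharCountEmbedder(), {"BUG-1": "app crashes"})
```

The rest of the pipeline depends only on the `embed` method, so replacing one model with another would not require changes to the similarity or ranking code.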

Figure 7. BUGLE v3.0.0 Overview

References

Ashish Vaswani, N. S. (2017). Attention Is All You Need. Advances in Neural Information Processing Systems, 15. Retrieved from arXiv:1706.03762

Holdsworth, J. (2024, June 6). What Is NLP (Natural Language Processing)? IBM. Retrieved June 23, 2024.

Jacob Devlin, M.-W. C. (2019, June). BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. Minneapolis, Minnesota: Association for Computational Linguistics.

MSV, J. (2017, August 8). In The Era Of Artificial Intelligence, GPUs Are The New CPUs. Forbes. Retrieved from https://www.forbes.com/sites/janakirammsv/2017/08/07/in-the-era-of-artificial-intelligence-gpus-are-the-new-cpus

Figure 8. Hit Rate vs Token Size 10 and 15 Percent Removal

Figure 9. Hit Rate vs Token Size 20 and 25 Percent Removal

Figure 10. Hit Rate vs Token Size 30 and 35 Percent Removal

Figure 11. Hit Rate vs Token Size 40 and 45 Percent Removal
